Where AI’s Future Is Being Built

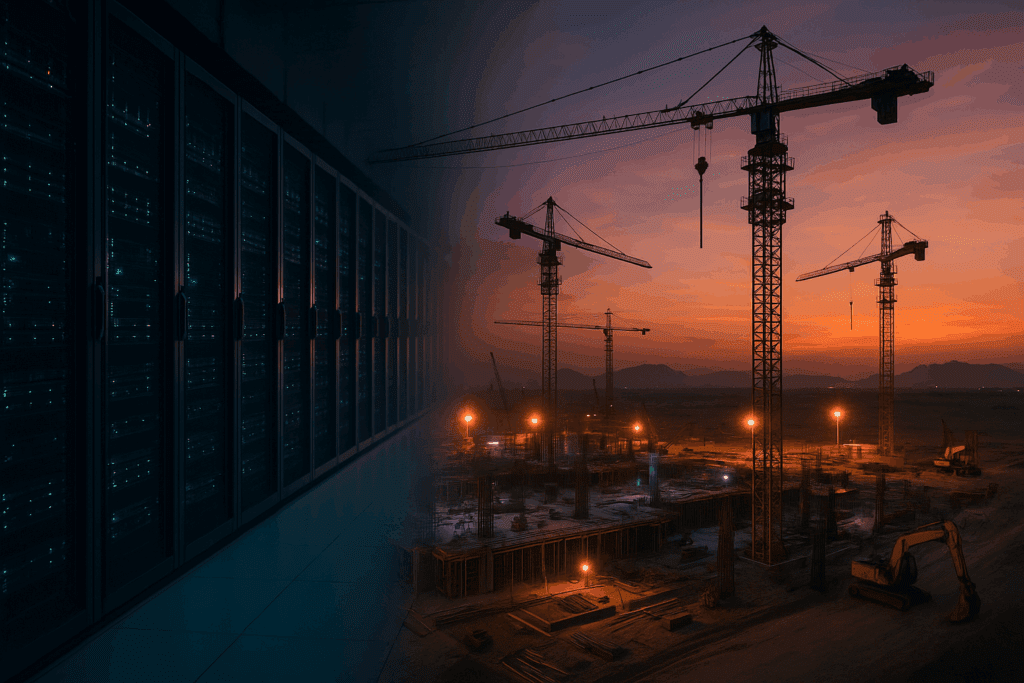

Have you ever watched a massive server rack hum softly in a dim data hall and thought, “Here is the future”? I had that thought while reading about a new AI “Zone” under construction in Saudi Arabia. It made me realize that today’s big leaps in AI aren’t just about smart chatbots; they’re about where the compute runs, how it is built, and who controls it.

This isn’t just hype. It’s infrastructure. It’s models. And it’s strategy.

Key Developments & Trends in AI

1. Company Moves & Infrastructure

- Amazon Web Services (AWS) and HUMAIN (Saudi Arabia’s newly formed national AI company) have announced a $5 billion+ investment to build a dedicated “AI Zone” in the Kingdom. The zone will include AWS’s AI infrastructure, UltraCluster networks for training and inference, and talent and startup programs. (About Amazon)

- Why this matters: It underscores that the AI race isn’t just about flashy models—it’s about where compute and data live, and who controls them.

- Counterpoint: Big money doesn’t guarantee immediate competitive capability — building talent, aligning algorithms with real business use-cases, and execution remain significant hurdles.

- Qualcomm Incorporated has announced the AI200 and AI250, high-end data-center inference accelerators aimed at large and multimodal models. (Tom’s Hardware)

- Why it stands out: Inference at scale (running models, serving them efficiently) is becoming one of the biggest bottlenecks. Efficient chips + infrastructure will matter.

- Watch-out: Hardware is only one piece of the puzzle; software stack, ecosystem support, model adaptation and real-world integration still need to catch up.

- Public funding: Agencies like the National Science Foundation continue to invest heavily in AI research, workforce development and societal implications (this is more of a trend than a specific single news item this week).

- Why it’s good: It signals that AI is being institutionalized, not just hyped.

- Caveat: Research funding is foundational but by itself doesn’t guarantee commercial success or immediate deployment.

2. Advances in Models & Modalities

- We are seeing a shift: rather than only chasing ever-bigger generalist language models, there’s increasing emphasis on efficiency, specialization and multimodal capabilities. For example, models that handle image + text + audio, or domain-specific summarization.

- Implication for builders: Smaller, purpose-built models might beat giant monoliths in specific use-cases.

- Trade-off: Narrow models may require more maintenance and lack the flexibility of bigger models.

- Regional & alternative players are stepping up: the French company Mistral AI has released open-weight reasoning models emphasizing performance and cost-effectiveness. The point: it is not only the U.S. and China that matter.

- But: Open-weight means you might need to self-host or handle infrastructure yourself; ecosystem may be less mature.

- Multimodal/agent-style models: Google DeepMind’s Gemini model family is reported to include reasoning, coding, audio, image and multimodal capabilities.

- Why it’s interesting: If models can take video, text and audio as input and generate richer output, that opens up new creative and workflow use cases.

- Caveat: Many models look good in demos — real-world robustness, integration costs, domain fine-tuning, and cost/time still remain big challenges.

3. Ecosystem & Emerging Technologies

- As the World Economic Forum pointed out in its “Top 10 Emerging Technologies 2025” list, the convergence of AI + biotech, AI + sustainable materials, robotics + AI is becoming increasingly important.

- Takeaway: Don’t just focus on “chatbots” or “LLMs” — think about how AI intersects with other domains (materials, biotech, energy, robotics).

- Watch-out: These convergent domains often have longer time-horizons and higher risk.

- Neuromorphic computing (hardware inspired by the brain’s neural architecture) is flagged as a major frontier for efficiency and capability leaps.

- Why: As models grow larger, cost, power consumption, and memory/bandwidth constraints become significant, so hardware innovation matters.

- But: Much of this is still experimental; commercial maturity is early.

- “Agentic AI” (systems that don’t just respond to prompts but reason, plan, act) is gaining more attention.

- Positive: That kind of capability aligns with workflow automation, summarization, intelligent assistants.

- Risk: Autonomy means oversight, error-risk, liability and governance become critical concerns.

4. Geopolitics, Regulation & Market Dynamics

- Regional competition: Countries like Saudi Arabia, the UAE, and China are ramping up AI infrastructure and talent development. For example, Saudi Arabia’s pivot via HUMAIN shows the Middle East is seriously entering the AI “group chat.” (WIRED)

- Implication: If you build global AI systems or rely on models and services hosted in other countries, you’ll need to watch export controls, regulation, local-data rules, localization, and sovereignty requirements.

- Counterpoint: While regional advantage matters, global interoperability and data flows remain complex.

- Regulation and governance: “AI governance” is no longer optional. Transparency, bias mitigation, auditability, and regulatory compliance will become part of day-to-day business operations.

- Good: Opens up opportunity for services/tools in governance, monitoring, certification.

- Challenge: If you build without designing governance in from the start, you may face problems down the road.

- Model size / performance plateau: Analysts argue that simply making models bigger may yield diminishing returns — smarter fine-tuning, retrieval-augmented models, domain-specific models may offer better ROI.

- Insight: For startups or domain-users, instead of chasing the biggest model, ask: “Which model + domain data + integration strategy gives me value?”

Important Caveats & Risks

- Hype vs Reality: Many announcements are impressive but real-world deployment still has friction: data quality, infrastructure cost, latency, reliability.

- Compute & cost: Even though models become more efficient, training/inference at scale still has cost and operational complexity. If you build a product relying heavily on very large models, think about cost economics early.

- Specialization vs Generalization trade-off: Smaller specialized models may outperform in narrow domains — but if your product needs to expand, you may find limitations.

- Governance, Ethics, Regulation: As you deploy AI, issues like data privacy, bias, explainability, liability become important — ignoring them is risky.

- Market saturation / differentiation: Many startup ideas now include “AI summarization”, “retrieval”, “agents” — you’ll need a distinctive angle (domain expertise, vertical focus, unique data) to stand out.
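The “think about cost economics early” caveat above can be made concrete with back-of-the-envelope arithmetic. Below is a minimal Python sketch that compares monthly inference spend for two model options under a fixed request profile. Everything in it is an assumption for illustration: the `ModelOption` class, the `monthly_cost` helper, the model names, the per-token prices, and the traffic numbers are all hypothetical and do not reflect any provider’s real pricing.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    """Hypothetical per-token pricing for a model; not real vendor prices."""
    name: str
    usd_per_1k_input_tokens: float
    usd_per_1k_output_tokens: float


def monthly_cost(option, requests_per_day, input_tokens, output_tokens, days=30):
    """Estimate monthly inference spend in USD for a fixed request profile."""
    per_request = (
        input_tokens / 1000 * option.usd_per_1k_input_tokens
        + output_tokens / 1000 * option.usd_per_1k_output_tokens
    )
    return per_request * requests_per_day * days


# Illustrative numbers only: assumptions, not quotes from any provider.
large = ModelOption("large-generalist", 0.01, 0.03)
small = ModelOption("small-specialist", 0.001, 0.002)

for option in (large, small):
    cost = monthly_cost(
        option, requests_per_day=50_000, input_tokens=1_500, output_tokens=300
    )
    print(f"{option.name}: ${cost:,.0f}/month")
```

Swapping in your own traffic estimates and the prices you are actually quoted turns this into a quick sanity check before committing to a very large model. It deliberately ignores hosting, fine-tuning, and engineering costs, which can dominate for self-hosted open-weight models.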

Wrap-Up: What Should You Do Next?

If you’re not already exploring how AI can integrate into your workflows or product, you may already be behind.

At Vengo AI, our view is that tracking how inference hardware changes, how infrastructure deals shift by region, and how models evolve will guide how you position your platform.

The key question to ask yourself is not only “what model can I use?” but also “what infrastructure, domain fit, cost, and governance go with it?”

Because the risk isn’t in trying AI—it’s in standing still.